So, first things first, what exactly do we want to get out of any rating system? Any ranking system needs to be able to create a totally ordered set out of the teams in the league. This simply means that, given any two teams, either one team can be deemed better or they are equally good (or bad). Additionally, any totally ordered set maintains transitivity, e.g. if the Celtics are better than the Nuggets and the Nuggets are better than the Bobcats, then the Celtics are better than the Bobcats.

The most basic ranking system that can satisfy the requirements of a totally ordered set is a "rank-ordering". This involves ranking the $n$ teams in a league $1$ through $n$. Regular season standings are the most simple way of doing this, and as we know are not always particularly accurate. In a rank-ordering, if you take any two teams, the team with the lower number will be "better". However, this doesn't tell you how much better an individual team might be.

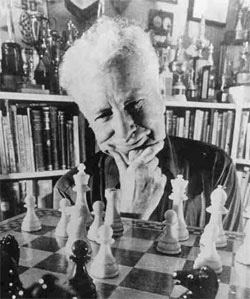

Moving from the world in which we merely rank teams against one-another to a world in which those rankings can give us some insight into exactly how much better a certain team is can be a very complicated step. This is where our good friend Arpad Elo comes in.

The Elo Rating System

|

| Arpad Elo contemplating his next move |

Initially designed as an improved rating system for use in international chess competitions, Arpad Elo ended up creating a ranking algorithm that has since been put to use in a variety of different games and settings. In the online computer game League of Legends, players are ranked using the Elo rating system, and Nate Silver (of the fivethirtyeight election forecasting blog) has implemented a modified Elo algorithm to rank baseball teams in the MLB. Elements of the Elo system are also used in helping determine the BCS rankings in NCAA football.

What is so special about this algorithm that warrants such widespread use? Well, it provides probabilistic insight into the relative skill difference between any two teams. This is incredibly important as instead of knowing that Team A is better than Team B, we can instead say that Team A has a 75% chance of winning a match against Team B.

Damn. Now, to learn exactly how this is accomplished we'll take a slightly more technical look at the actual algorithm. I'll try to use my "sick-nasty teaching skillz" to convey the mathematics behind the theory in an accessible manner, so bear with me.

| Heavy favorites UNC vs underdogs UVM |

To show how this intuitive system works mathematically we need to begin by defining some variables. We'll let $R_A$ and $R_B$ signify the Elo rating of Team A and Team B in our hypothetical encounter. These are the numerical ratings that can be used to create a rank-order of the teams. The next step involves converting these ratings into expected outcomes. To do this we begin by assigning each team a new variable that we'll call Victory Points. $V_A$ and $V_B$ will signify the Victory Points for teams A and B respectively. We define Victory points with the following formula: $$V_A=10^{R_A/400}$$

Whenever two teams play each other, the result is directly proportional to the number of Victory Points each team has. If we represent Victory Points with marbles, each team would throw all their marbles into a bucket, and the winner would be determined by randomly picking one out. Thus, if a team has twice as many Victory Points as their opponent, they will be twice as likely to win. Therefore, if we let $E_A$ represent the expected outcome for Team A in a contest against Team B, then we can define $E_A$ with the following formula: $$E_A=\frac{V_A}{V_A+V_B}$$

|

| Similar to the NBA Draft Lottery, where the worst performing teams are given more lotto balls, thereby increasing the likelihood that they get an early draft pick |

This expected outcome, $E_A$, is going to be a number between 0 and 1. If we consider a loss to be 0 and a win to be 1, this directly becomes the chance that a team will win. Now we need to figure out how a team's rating will change after a game based on the actual result. If we let $S_A$ signify the actual result for Team A ($S_A=1$ if Team A wins, and $0$ if they lose), then we can define Team A's change in rating, $\Delta_A$, with the following formula: $$\Delta_A=K(S_A-E_A)$$ This new variable $K$ is called the k-value. It is the maximum possible rating change that a team can achieve. For example, if Team A had a 100% chance of winning ($E_A=1$) according to their rating and ended up losing ($S_A=0$), their rating change would be $\Delta_A=K(S_A-E_A)=K(0-1)=-K$. The opposite is true if a team with a 0% predicted chance of winning ended up taking the game in the infinitely unlikely upset. This is a parameter that can be modified depending on how accurate the ratings are performing, but Arpad noticed that k-values between 16 and 32 worked the best with his system in Chess. Since then, similar k-values have been used successfully. In the computer game League of Legends a k-value of 24 is used. We will begin with $K=24$ in our analysis, but will experiment with other values to see if they are more or less appropriate.

Thus, if Team A has a rating of $R_A$ going into a game, it will have rating $R'_A$ at the end, where $R'_A$ is defined as: $$R'_A=R_A+K(S_A-E_A)$$ To illustrate exactly how this works, let's make up some numbers and use this formula in a real example. Let's consider that UVM vs UNC game from the 2012 NCAA men's tournament. We all know UNC was highly favored over UVM, but for the sake of argument we'll assign each team and Elo rating and look at how those rating would change depending on the actual outcome of the game. We'll begin by giving UNC a rating of $R_{UNC}=1925$ and let UVM be rated at $R_{UVM}=1650$. In this scenario, what is the expected outcome of this game?

To determine the expected outcome we have to calculate the number of Victory Points that each team will contribute towards the end result. UNCs Victory Points can be calculated using the above formulas as $V_{UNC}=10^{R_{UNC}/400}=10^{1925/400}=64,938$. Similarly we can calculate UVMs Victory Points as $V_{UVM}=10^{R_{UVM}/400}=10^{1650/400}=13,335$.

Since we know the expected outcome of the game is directly proportional to the Victory Points we then know that the chance UNC wins is equal to $E_{UNC}=\frac{V_{UNC}}{V_{UNC}+V_{UVM}}=\frac{64,938}{64,938+13,335}=83\%$. Similarly UVMs chances can be found to be $E_{UVM}=\frac{V_{UVM}}{V_{UNC}+V_{UVM}}=\frac{13,335}{64,938+13,335}=17\%$.

If we assume a k-value of 24 as discussed earlier, we can then determine each teams new ratings depending on the outcome of the game. Let's take the more likely scenario where UNC wins ($S_{UNC}=1$ and $S_{UVM}=0$). UNCs new rating will be defined as $R'_{UNC}=R_{UNC}+K(S_{UNC}-E_{UNC})$$=1925+24(1-.83)=1929$. Similarly UVMs new rating will be $R'_{UVM}=R_{UVM}+K(S_{UVM}-E_{UVM})$$=1650+24(0-.17)=1646$ As you can see, UNC's rating will increase by 4 points while UVM will drop 4 points.

However, what happens if UVM pulls out the upset victory? In this case ($S_{UNC}=0$ and $S_{UVM}=1$) we have different changes in the teams' ratings. Here we see UNC drops to $R'_{UNC}=R_{UNC}+K(S_{UNC}-E_{UNC})$$=1925+24(0-.83)=1905$ while UVM jumps up to $R'_{UVM}=R_{UVM}+K(S_{UVM}-E_{UVM})$$=1650+24(1-.17)=1670$. Thus UNC and UVM will lose and gain 20 points of rating respectively. This again makes intuitive sense, as poor performing teams should be more greatly rewarded for toppling an established giant than they should be punished for losing a game they had no right to win.

At this point in our journey, I'd like to take a moment for some self-reflection. If you've made it this far and are not utterly confused, please give yourself a hearty pat on the back, and thanks for legitimizing this exercise in mathematical education for myself. If there's anything that's unclear, leave a comment below and I can try to provide an explanation from a different perspective.

Stay tuned for a post in the next couple of days highlighting where in this process we can make modifications, and what some of the most necessary and simple modifications might be. For example, where can we incorporate home-court advantage into this process?

No comments:

Post a Comment